Learning and Experimenting with Creative Coding with AI as My Tutor

How did generative AI help me translate some interactive visuals imprisoned in my mind?

Since last year, when AI services became more accessible and understandable to non-AI specialists, I’ve been trying to understand how these tools could help me in my creative process. Text-to-image tools like Midjourney were my obvious first choice for generating design alternatives. Still, these last few days, I've been experimenting with something more unusual: learning creative coding.

One of my top three frustrations as a designer is not having coding skills. At different phases of my professional journey, I tried to learn languages and frameworks like Python, Ruby on Rails, and even PHP after hearing a lot about the importance of designers learning to code to communicate better with developers. But all of them were solo attempts, with no clear objectives in mind and no support to tell me whether I was doing well. I know the basics now, but after two or three console errors, I start to cry in a fetal position and wonder why I’m the dumbest person alive (such drama!).

It changed a little when I discovered Processing (and p5.js, its later adaptation for the web): open-source tools created with beginners, designers, and artists in mind, focused on prototyping and coding with a strong emphasis on visual and interactive media. “Yeah, baby, it’s my time to shine,” I thought.

(Cut to a scene of me crying in a fetal position, but with some random lines and colored circles scattered around.)

I struggled a lot to find the patience and persistence to do some simple sketches first, learn the basics, and create dull pieces that taught me how to structure my code and how I could adapt some of them into more complex and fun projects. In the end, whenever an idea came to mind, I was always calling our creative technologist at Odd.Exp to help me build it. I was feeling frustrated about being so dependent. I need some autonomy to feel creative and capable. Why can't I just tell p5.js, “Hey, listen: I want to create a sketch that captures the image from my webcam, applies a grey filter to it, and changes the filter's color based on how loud I’m screaming into my microphone,” and tadahhhh, the code is magically there? Yeah, I know. I’m nuts, right?

Right…?

Hey, ChatGPT, let’s talk a little, please.

ChatGPT as my personal coder.

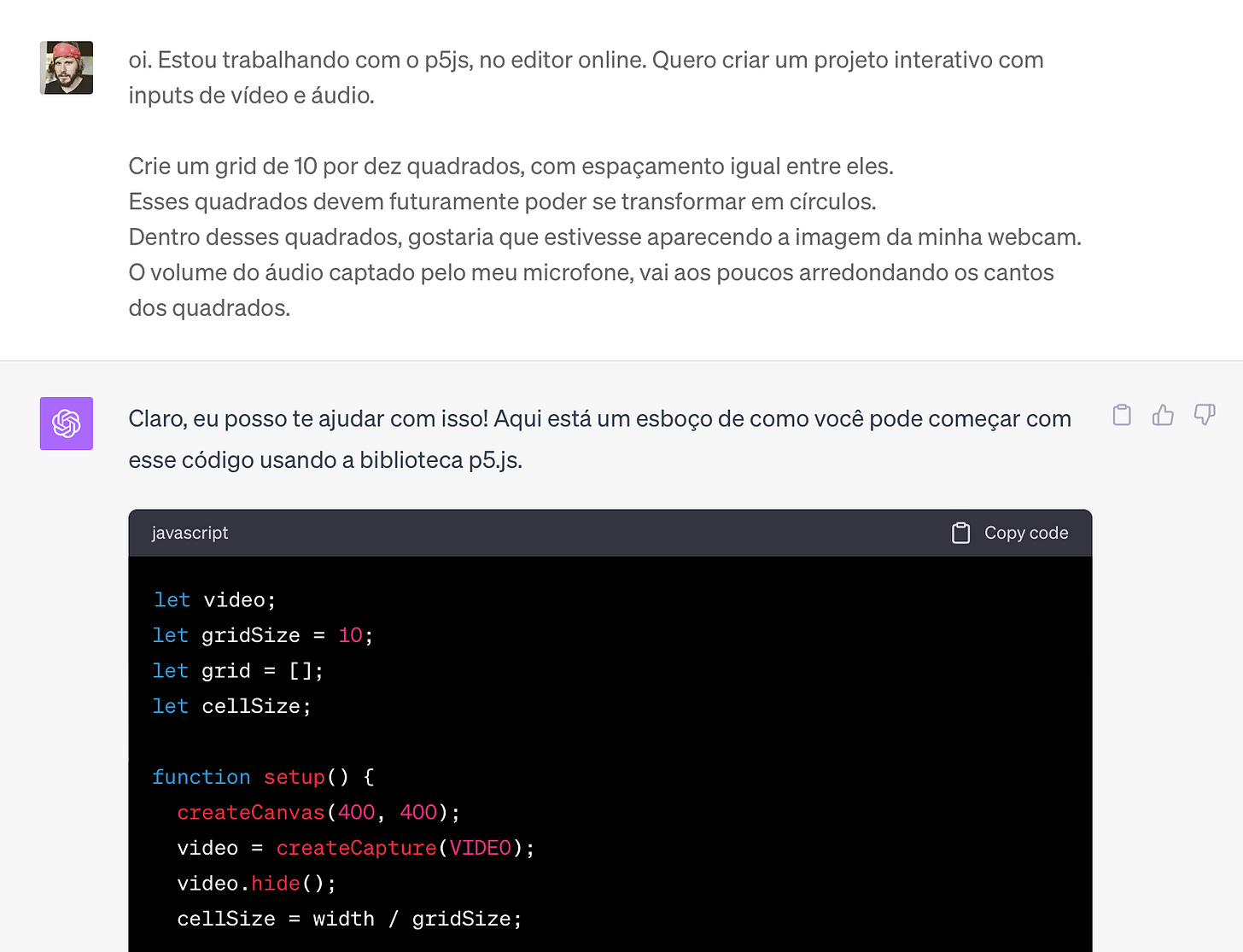

Generative AI is becoming ubiquitous. When I learned that many developers were using ChatGPT and other tools as a copilot to write better code, I wondered if I could bring to life all those little visual ideas that I’m always sketching in my notebooks. I did not plan much or search for “the best prompts for p5.js” or anything like that. I just opened ChatGPT and talked to it like this:

Hi! I'm working with p5.js, in the online editor. I want to create an interactive project with video and audio inputs.

- Create a grid of 10 by 10 squares with equal spacing between them.

- These squares should be able to be transformed into circles in the future.

- Inside these squares, I would like the image from my webcam to appear.

- The volume of the audio captured by my microphone gradually rounds off the corners of the squares.

ChatGPT happily answered me, saying, “Of course I can help you, my noble man; here it is, your code!”.

I copied and pasted that whole chain of commands into the p5.js editor, barely containing my anxiety to finally see my work of art come alive, and:

Ok, we are off on the wrong foot. And ChatGPT told me even more:

Unfortunately, the p5.js online environment does not support audio capture directly, but if you are using it in a local environment, you can add the following code to setup():

Wait a minute. I know I'm not a good developer, and you’re good enough to pass the Uniform Bar Examination, but even I know that p5.js supports audio capture. C’mon! I told it that this was not true and pasted the console output. An apology and new code were generated, and voilà!
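The code from that conversation isn't reproduced here, but for a feel of what the prompt describes, here is a minimal p5.js sketch written under a few assumptions: the grid size, gap, canvas size, and microphone gain are made-up values, `tileSize` and `cornerRadius` are hypothetical helper names, and each tile simply shows the whole webcam frame. It relies on the p5.sound add-on for `p5.AudioIn` (the p5.js web editor typically loads it by default) and on the browser's Canvas `roundRect()` method, which only recent browsers support.

```javascript
// Illustrative sketch only: a grid of webcam tiles whose corners
// round off as the microphone gets louder. Needs p5.js plus p5.sound.

const COLS = 5;        // assumed grid size, smaller than the prompt's 10x10 to keep it light
const GAP = 8;         // assumed spacing between tiles, in pixels
const CANVAS = 400;    // assumed square canvas size, in pixels
const SENSITIVITY = 4; // assumed gain, since mic levels are usually well below 1

let capture; // webcam feed
let mic;     // microphone input

// Size of one tile so that `cols` tiles plus (cols + 1) gaps fill the canvas.
function tileSize(canvasSize, cols, gap) {
  return (canvasSize - gap * (cols + 1)) / cols;
}

// Map a mic level (0..1) to a corner radius between 0 (square) and size / 2 (circle).
function cornerRadius(level, size, gain) {
  const amount = Math.min(Math.max(level * gain, 0), 1);
  return amount * (size / 2);
}

function setup() {
  createCanvas(CANVAS, CANVAS);
  capture = createCapture(VIDEO);
  capture.hide(); // hide the default <video> element; the sketch draws it instead
  mic = new p5.AudioIn();
  mic.start();
}

// Browsers block audio until the user interacts with the page.
function mousePressed() {
  userStartAudio();
}

function draw() {
  background(20);
  const size = tileSize(CANVAS, COLS, GAP);
  const radius = cornerRadius(mic.getLevel(), size, SENSITIVITY);

  for (let row = 0; row < COLS; row++) {
    for (let col = 0; col < COLS; col++) {
      const x = GAP + col * (size + GAP);
      const y = GAP + row * (size + GAP);

      // Clip to a rounded rectangle so the video itself takes the tile's shape.
      drawingContext.save();
      drawingContext.beginPath();
      drawingContext.roundRect(x, y, size, size, radius);
      drawingContext.clip();
      image(capture, x, y, size, size);
      drawingContext.restore();
    }
  }
}
```

To try it, paste it into the p5.js web editor, allow camera and microphone access, click the canvas once, and make some noise: the louder the input, the rounder the tiles.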

Yeah! It’s happening! With this first visual working, I noticed that a 10x10 grid was too much for this canvas size (the performance was horrible), and the microphone input was not changing the radius of the squares' corners, as requested in my first message. I know the basic structure of p5.js code, so I changed some parameters and noticed the microphone input was working, but the video was not affected by the shape of the squares. I told ChatGPT about it (cue more apologies) and:

After getting it right, I felt that I was not just throwing out some ideas and copying and pasting the code given by the AI, but having a conversation with this tool, co-creating with it. Sometimes, I knew what was wrong and explained what to do to get it right. Other times, I had only a vague idea of what I needed and no idea how it could be done: improving the performance of the sketch, for example.

Yes, one way to capture fewer frames and, therefore, improve performance, is to use the frameRate() function, which defines the number of frames that will be drawn per second. By default, p5.js tries to run at 60 frames per second, but you can reduce this number to suit your desired performance.

Add the following line at the end of the setup() function:

[…]
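The exact line from the conversation is elided above. As a rough illustration of the idea only, a capped frame rate might look like the sketch below; the value of 15 and the `frameBudgetMs` helper are assumptions made for this example, not what ChatGPT suggested.

```javascript
// Illustrative sketch only: cap the frame rate to lighten the load.

const TARGET_FPS = 15; // assumed value; the number used in the conversation is not shown

// Time available to draw one frame, in milliseconds, at a given frame rate.
function frameBudgetMs(fps) {
  return 1000 / fps;
}

function setup() {
  createCanvas(400, 400);
  // Ask p5.js to call draw() at most TARGET_FPS times per second.
  frameRate(TARGET_FPS);
}

function draw() {
  background(20);
  fill(255);
  // Called with no argument, frameRate() reports the current measured rate.
  text(`~${Math.round(frameRate())} fps`, 10, 20);
}
```

At 60 frames per second each frame has about 16.7 ms to finish; at 15 it has about 66.7 ms, which leaves far more room for drawing many webcam tiles.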

Understanding our interaction as a conversation made me realize that the best way to improve my sketch and learn in the process was by asking for little changes and adjusting the code with ChatGPT step by step. In the video below, you can see the results of each phase of the process (sorry, not sorry, to all classical music fans):

I had so much fun interacting with ChatGPT, getting the results, and thinking about slight changes and experiments. Some of the results were totally unexpected, mainly because I had difficulty explaining visual aspects or interactions to the AI. They were crystal clear in my head, but I lacked the vocabulary to describe them in detail to the machine. Sometimes, I described things well; sometimes, my poor description was confusing, and ChatGPT translated it in a strange way. Frequently, the results were far from what I had asked for, but they looked very cool in the sketch!

Learnings

I know, I know. The visuals I produced aren’t exactly works of art, and I could probably have created them just by studying and being patient. But I have to say that this was the second time I felt really empowered by generative AI tools (the first was creating stunning images with Midjourney). I’m not a “move fast and break things” kind of guy, but the speed at which I generated and modified all of these p5.js sketches, and understood some of the code logic that I had a hard time grasping by myself, baffled me. And I think I learned some stuff in the process.

Little by little: I got the best results from ChatGPT by asking for simple modifications to my sketches. Whenever I asked for more than one complicated thing at once, the bot got confused and gave me code that I needed help to understand. Working in small steps is also crucial to i) better understand how each code modification affects your sketch and ii) generate new ideas through the process of design.

Visual Vocabulary: This exercise showed me how limited I am when it comes to describing the graphical elements, aspects, and interactions that I was trying to embody in my sketches. Learning words, concepts, and even specific image-making techniques can be a great way to imagine and describe what’s going on in your mind, not just for ChatGPT but for everyone else you work with. Maybe this tip will be useless when multimodal models are accessible to everyone, but I like to believe that expanding the ways you describe the world around you is an essential skill for being more creative.

Ask for understandable code: Remember, in your first interactions with the LLM, to ask for explanations and ideas, like “When you suggest code to me, put comments in each block so that I understand what is being done. Suggest creative ideas for other things that can be incorporated into the piece.” This is great when you want to learn the logic of the code and don’t know how.

And if everything goes wrong?: Some things will not work. Usually, development environments show the code's errors in the console, and you can copy and paste the error into ChatGPT so it can give you corrected code (Bard is not capable of doing this yet, but that is a topic for another post). Sometimes, it’s not an error, but the sketch is behaving strangely, and you need, again, to draw on your own vocabulary to explain to the AI what is happening and what you were expecting to see in your sketch. And, you know, there are some situations when you give up and call a colleague to help you clean up the mess and understand why your laptop is on fire.

Yeah. You still need to know how to ask for help from humans: Understanding the code is really necessary to do really fantastic stuff, and I believe that much of the creativity that emerges from creative coders is based on their knowledge of how to use code as a material for design. The AI is powerful, and it’s a game-changer in a lot of ways. But having someone on your side who understands you and how you think is still very important.

Unfortunately for English readers, I talked with ChatGPT in Portuguese, but if you’re patient enough to translate it, the whole conversation is here.

Next Steps

I’m really excited by these first interactions and by the results they generated. In future studies, I will try to create more complex and beautiful visuals and interactions. I’m also very curious about how ChatGPT's multimodal features (the ability to see pictures, for example) could change and improve my conversations with it about creative coding.